Bits for life

How long can a bit live?

This question is both nonsensical and serious at the same time. The base concept of a bit says it doesn’t have a lifetime so much as it encodes a hint at the probability distribution. In real life, though, we use those bits to encode almost everything today. Looking around my house I can see some ancient technologies that encode analog values (tapes and records) or symbols (books). Only the books and bits are still in production, and the books are declining fast.

This question of how long you can safely store a bit has been around for a long time. When CD-ROMs first came out many people (myself included) thought they would be a miracle archive medium. We soon learned that some of the manufacturing choices meant they would degrade based on environmental factors. Within a few years of their introduction we were already worried about whether they would last a decade. We have had this same problem with every storage technology.

In all cases the expected life of a bit is probabilistic and heavily dependent on the environment. In all cases I can find there is an estimation of the error that would creep in over X time and Y conditions. Nothing is permanent, but we can choose a technology and control the environment some to meet most needs.

No single bit is by itself though. For information to be preserved we need enough bits to survive to recreate the information in the future. If I have a file with 10,000 bits (small by today’s standards) and I can’t recreate the information if any one bit dies (an extreme example, but not far-fetched), then the survival of every one of those bits is the survival of the file itself. If one of them goes bad every 10 years on average, the file will die every 10 years on average.
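To put rough numbers on that, here is a minimal sketch. It assumes every bit fails independently with an exponentially distributed lifetime, which is a simplification I am making for illustration; the function names and the 100,000 year per-bit figure are hypothetical, chosen so the file-level result matches the 10 year example above.

```python
import math

def file_mean_life_years(n_bits: int, bit_mean_life_years: float) -> float:
    """Mean time until the FIRST of n_bits fails.

    Assumes independent bits with exponential lifetimes, so the
    failure rates simply add up.
    """
    return bit_mean_life_years / n_bits

def file_survival_probability(n_bits: int, bit_mean_life_years: float,
                              years: float) -> float:
    """Probability that all n_bits are still intact after `years`."""
    return math.exp(-n_bits * years / bit_mean_life_years)

# Hypothetical: each bit alone averages 100,000 years,
# yet a 10,000 bit file with no redundancy averages only 10.
print(file_mean_life_years(10_000, 100_000))
print(file_survival_probability(10_000, 100_000, 10))
```

Under these assumptions the chance that the file is still intact at the 10 year mark is about 37%, even though each individual bit is expected to outlive me many times over.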

Error correction and resilient file formats can help a lot, as I may need to lose 1,000 of the bits before I can’t recover the file. Also, maybe a lost bit at the end doesn’t bother me as much as one at the beginning. In these cases each flip of the dead-bit coin is less likely to be serious, as a number of other bits also have to die.
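A sketch of how much that tolerance buys, again assuming independent bit failures (real media tend to fail in bursts, so treat this as optimistic). The function names and the per-bit failure probability are my own illustrative choices, not measurements. The sum is done in log space because the binomial coefficients for 10,000 bits are far too large for ordinary floats.

```python
import math

def log_binom_pmf(n: int, k: int, p: float) -> float:
    """log of P(exactly k of n bits have failed), each with probability p."""
    if p == 0.0:
        return 0.0 if k == 0 else -math.inf
    if p == 1.0:
        return 0.0 if k == n else -math.inf
    log_comb = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    return log_comb + k * math.log(p) + (n - k) * math.log1p(-p)

def recoverable_probability(n_bits: int, max_lost: int, p_bit_fail: float) -> float:
    """P(at most max_lost of n_bits have failed), i.e. the file is recoverable."""
    total = sum(math.exp(log_binom_pmf(n_bits, k, p_bit_fail))
                for k in range(max_lost + 1))
    return min(total, 1.0)

# Hypothetical per-bit failure probability over the storage period.
p = 1e-4
print(recoverable_probability(10_000, 0, p))      # no bit may be lost
print(recoverable_probability(10_000, 1_000, p))  # up to 1,000 may be lost
```

With no tolerance the file survives a little over a third of the time; with room to lose 1,000 bits it is essentially certain to survive at the same per-bit failure rate.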

In the end I am worried about the probabilistic life of the bit and how long it can last by itself and in a larger group. This is the key to long term preservation.

Why am I thinking about this?

Well, I am more and more concerned with the preservation of knowledge. That includes personal information (pictures of my family, financial information, etc.), work information, and the information that makes society work over time. We have seen what happens at each of these levels. Families can have a dramatic event that wipes out large swaths of memories from everywhere but their brains (wildfires, floods, tornadoes, and even just a computer failure). Businesses get into trouble all the time for losing information. Societies losing information have terms like “dark ages” associated with them. It is bad all around when a bit failure occurs, and the scale of bad can be fairly disastrous.

When information, knowledge and/or wisdom is lost, it means problems will be relived. This is a waste of human effort and in many cases can result in serious disasters. It isn’t new either. We can see it in quotes as history has become better preserved.

“Those who cannot remember the past are condemned to repeat it.”

-Spanish philosopher George Santayana

or the more accurate take on it…

“History doesn’t repeat itself, but it often rhymes.”

-commonly attributed to Mark Twain

What this means is that for society, or even subsets of society, to flourish and progress we need a way of preserving and passing on knowledge and wisdom at a minimum, and data in the best case.

Looking around I think we had a firm grasp on how to do this up until the last 50 years or so. Before the 1970s, all knowledge transfer relied on books for long term preservation. Around that time more and more was switching to other media. Music and art became more about the performance than anything that could be described in representations like sheet music. Data started to become larger than a printout and more important than the general descriptions. Worse, many descriptions started to proliferate and were often wrong. Without the data a savvy reader just had to choose which opinion or misinterpretation to believe.

What are the options?

There are almost too many to choose from to be honest. I’ll start with the most common and work my way to the most able to preserve bits.

Tape

Just for the record I’m going to mention both types of tape. First, paper tape. Think of this as a really long punch card. If the paper is stored well, those holes don’t degrade, but reading the tape can get dicey as the tape ages and gets fragile. You could always have an optical scanner for the holes, but even flattening it out can cause it to fall apart. Every time I have used or seen paper tape used it has broken, and none of those were old tapes.

Magnetic tape is still used all over the world for long term archiving of data. Often it does have a long life, but not from a practical perspective. The problem is that it can be a mess to work with and store. Formats change and the tape itself can fail for a number of reasons. Old reel to reel versions like 7 track have become so rare that when NASA found important data stored on them they had to make a public plea for equipment to read them. The newer 9 track drives are still available in some places, but are disappearing fast. So much scientific data has been kept on these tapes that much of it may have been lost to time and unfortunate events like floods.

Many formats have come along that solved the problems with reel to reel by placing the tape in cartridges. We’ve had QIC (Quarter Inch Cartridge) formats like QIC-11, QIC-24, QIC-120, QIC-150, and QIC-40. A drive for one format might read one other, but in many cases the cartridges aren’t even the same shape. There were 8mm and 4mm DAT drives that stored tons of information for their day and disappeared almost as quickly as they arrived. You may start seeing a trend.

The tape community has seen fit to answer these problems with a cartridge system that is backwards compatible and designed to reduce the parts that could fail in the cartridge. This has become the Linear Tape-Open (LTO) standard. It builds on the mechanics of DLT, which had seen success for 15 years prior.

LTO tapes and drives are now available as LTO-9, which holds 18TB of data. The roadmap already plans up through LTO-12, which is meant to store 144TB when it is released. Unfortunately these drives can at best read their current generation and 2 previous generations, and the newest drives (LTO-8 and LTO-9) only read 1 generation back. So if you want to read a tape from 2013 you need an older drive than what is made today. This makes the format only really good for 10-20 years, as the drives will fail as the rollers dry up and the lubrication spoils. I’ve never seen an LTO tape drive that is more than 10 years old still working. Knowing this, the tapes are only designed for 17 years with 1 write a month.

Scary, but of this class of storage, the 9 track reel to reel probably has won the longevity trophy without ever trying.

Drives

If you are my age, you know that drives are rotating magnetic media that came in 2 flavors. Floppy and hard. If you are young you may think of drives as anything that holds data. We will be using my generation’s definition here.

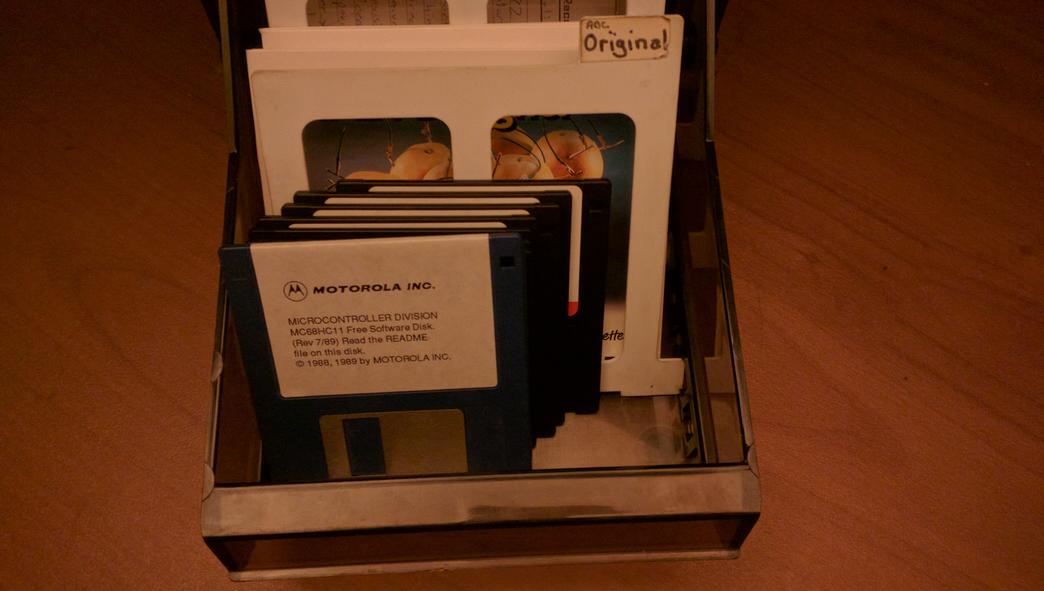

Floppy drives were everywhere in the 80s and 90s. Many computers could only store data on floppy drives as all other storage was too expensive or too slow (sometimes both). We had 8", 5.25" and 3.5" floppies. Each of these could eventually hold a million bytes or so, but stories abound of floppies that never worked again after any one of a number of problems, the most common being placed near a magnet.

No one ever considered these long term storage or a suitable archive medium. Oddly, I can still read most of the floppy disks that I could read 25 years ago. The drives are fairly simple and I have learned how to keep the disks safe. I have floppies that I can’t read, and I have copied all the data I have on them to my file system, but reading floppies from 30+ years ago tells me it is one of the better archival formats we have talked about yet. That is not so much a vote of confidence in floppies as a vote of condemnation for everything else.

Hard drives are still in use today. You either have one in the machine you are using now, or you have a newer technology that emulates one. These had rigid platters that spun as a head floated above, reading and writing data. For the drives to hold enough data to be worth buying the head had to float so close that a speck of dust would not fit under it. These drives had to be sealed and have precision machined parts. While I have been able to read hard drives after 20 years of sitting, there have been more that died in under 5 years.

Hard drives are still one of the most economical and easy to use storage media. There are many long term storage projects that just assume that failure will happen and have redundant drives to prevent data from being lost. This has worked very well, but I’m dubious, as the history of hard drive interface standards is a story much like tapes. Sure we all use SATA or SAS today, but who remembers MFM, SCSI and IDE? It wasn’t that long ago that we used each of those, but I’m the only person I know who can still read all 3 (and I’m getting dubious about MFM).
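The redundancy argument can be sketched in a few lines. This assumes drives fail independently, and that once a year every failed drive is replaced and refilled from a surviving copy; both are simplifications, and the 5% annual failure rate is a hypothetical number for illustration, not a measured one.

```python
def annual_loss_probability(p_drive_fail: float, copies: int) -> float:
    """Chance that every copy fails within the same year (independent drives)."""
    return p_drive_fail ** copies

def survival_over_years(p_drive_fail: float, copies: int, years: int) -> float:
    """Chance the data survives `years` of yearly check-and-replace cycles."""
    return (1.0 - annual_loss_probability(p_drive_fail, copies)) ** years

# Hypothetical 5% annual drive failure rate over 20 years.
for copies in (1, 2, 3):
    print(copies, round(survival_over_years(0.05, copies, 20), 4))
```

In this sketch a single drive has roughly a one in three chance of making it 20 years, while three copies that are checked yearly lose the data well under 1% of the time. The catch is the one above: someone has to keep drives and interfaces around to do the checking.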

Flash

Flash is the technology behind most Solid State Drives (SSDs) that we have today. It really is a wonder of modern technology. It is an outgrowth of Electrically Erasable Programmable Read Only Memory (EEPROM), where a bit is recorded as a charge on a capacitor. The input to the reading transistor (a MOSFET) is such a high resistance that the capacitor will hold the bit for years. Flash was a huge step forward in providing a way to quickly erase and rewrite small banks at a time.

Modern Flash devices are some of the densest ICs we have today. Each device has a controller that juggles what gets written and what gets wiped and moved to make it feel like a read and write device. Early on these write cycles could be counted in 1000s before the device was no longer reliable. Modern versions have 10-100x the number of cycles and they are managed by the on board controller to keep the whole device reliable in all but the most abusive use cases.

Flash is fast, dense, low power and seems so easy to use, but it is a little more probabilistic than any other media we have talked about so far. I haven’t tested any SSD past 15 years, but I have had many fail before that. The current crop seem more reliable, but I wouldn’t bet more than 5 years on any one of them to survive.

Optical disks

CDs, DVDs and Blu-ray have been the backbone of optical storage for the last 30 years. I mentioned before that each one of these can degrade if not taken care of, but one neat feature is that a modern Blu-ray drive can read any of the formats and write to most of them. That is one of the more impressive compatibility stories so far. Especially given the drives are dirt cheap.

The original CD-ROMs were mechanically pressed on metal attached to glass or plastic. These may hold data for centuries if they are cared for. As the demand for home writing and storage increased the discs went from a burned hole (which might store data well) to reversible dye in a glass or plastic substrate. As the discs became more flexible in how they could be written, the longevity suffered. Depending on the formulation of the media, data can survive from 10 years to over 100 years. I have not had a disc fail in storage for any reason other than mechanical damage in 30 years.

Overall, these optical discs are the best option so far. Long media life, drives are very backwards compatible, and the storage density is relatively high. I don’t know how long the drives will be made though as most computers can’t be bought with one today and the number of drives made will probably drop to 0 over time.

Other options

So far we have seen options that vary in ease of use, density and longevity, but all of them require an external reader to be useful. That might be the biggest limiting factor. Right now we can encode bits in DNA strands with incredible densities and longevity, but will a dark age be able to read any of these, let alone DNA?

We need something that is easy to read, has a decent density and longevity. This is what I would like to look at next.

ROMs

One of my favorites is the plain old simple ROM. Put an address in and out pops a word of data. Any computer working with voltages could be programmed to use them if the voltages are adjusted. They have very little that can fail and I have many devices that rely on these old ROMs that work just fine many decades later.

The problem is that they are hard to make. Unlike Flash, a ROM has to be custom made for each data set stored. While the densities can be as high as Flash, the cost to lay it out means each run of a particular ROM is measured in the millions of dollars. Maybe I can sell enough copies of Wikipedia to justify it, but it is a gamble.

There are programmable ROMs where fuses are blown or laser cut. Density suffers and the demand is low so no one has worked on increasing density beyond the minimal amount needed to boot a small device. It is just easier to use a Flash chip and let the user throw the item away when it fails.

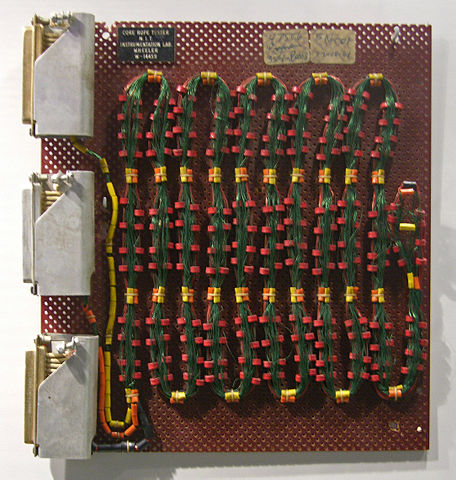

If I’m worried about the silicon becoming unreliable over time (charge does migrate, making gates prone to failure), there is a mechanical ROM known as core rope memory.

This is wire that is sewn in and out of iron beads to encode 0s and 1s. In theory this could be put in a block of acrylic and last for a very long time. I can make it at home, but the density is low. This is the type of option that you might use when your data can’t be lost and that is why it was used to store the programs for the Apollo flights.

Printing

We know that printed books can last centuries with fine details. How much data can we store on a printed page? Well, with a modern laser printer or inkjet we can store hundreds of KB per page. Neither of those will last forever though (both stick to other pages and suffer from lost material), but it isn’t difficult to get a well printed image of bits on acid free paper in a properly bound book.

There are multiple formats for doing just this. PaperBack is a software solution. Xerox invented DataGlyphs that encode the data in images and background coloring. Microsoft has played with some alternate encodings around the use of colors and shapes. You can also print 2D bar codes on a sheet of paper, and many of them can be tiled side by side.

Given that all of these just require the reading of an image and some simple software, they have a modest density, but high likelihood of survival. Paper can be fragile, but we have figured out how to keep it around for centuries at this point.
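As a rough check on the “hundreds of KB per page” figure, and to show how little software the idea needs, here is a minimal sketch. The printable area, the 3x3 printer dots per data cell, and the 25% share set aside for error correction and alignment marks are all my own assumptions, and the `#`/`.` grid is a toy stand-in for a real printed pattern, not any of the formats named above.

```python
def page_capacity_bytes(dpi: int = 600, width_in: float = 7.5,
                        height_in: float = 10.0, dots_per_cell: int = 3,
                        overhead: float = 0.25) -> int:
    """Estimated payload of one page when each bit is a square cell of dots."""
    cells_x = int(width_in * dpi) // dots_per_cell
    cells_y = int(height_in * dpi) // dots_per_cell
    raw_bits = cells_x * cells_y
    # Reserve `overhead` of the cells for error correction and alignment.
    return int(raw_bits * (1 - overhead)) // 8

def bytes_to_grid(data: bytes, cols: int) -> list[str]:
    """Lay the bits out in rows: '#' is a printed cell (1), '.' is blank (0)."""
    bits = "".join(f"{b:08b}" for b in data)
    bits += "0" * (-len(bits) % cols)  # pad the last row with blanks
    return [bits[i:i + cols].replace("1", "#").replace("0", ".")
            for i in range(0, len(bits), cols)]

def grid_to_bytes(rows: list[str], n_bytes: int) -> bytes:
    """Read the grid back into the original n_bytes bytes."""
    bits = "".join(rows).replace("#", "1").replace(".", "0")
    return bytes(int(bits[i:i + 8], 2) for i in range(0, n_bytes * 8, 8))

print(page_capacity_bytes())  # bytes per page under the assumptions above
for row in bytes_to_grid(b"bits", 16):
    print(row)
```

With those assumptions a 600 dpi page carries a bit under 300 KB. A real format adds error correction and a scanner-tolerant layout on top, but the reader still only has to find cells in an image and count them.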

We can also etch the same patterns into another material. I’ve joked about carving into stone in a post from almost a year ago. It is only half a joke. We could drill or acid etch the same patterns in stone. There are companies doing this in quartz, which could encode huge amounts of data and last for 1000s of years. Just good luck on being able to read it when you need it. The quartz will make a nice paperweight in the meantime.

Final thoughts for now

I think paper and etching are the best options at the moment for the problem I’ve outlined. Neither really strikes me as a great option, but you work with what you have and look for what you might find later.

There are still some gaps, and I think several things still need to be done. We need an archival format that is easy to work with. We need software descriptions, or even better a language, that can span time and explain how to read the format. Finally, we need a way to convey all of this to someone who will benefit from reading the bits laid down.